Abstract

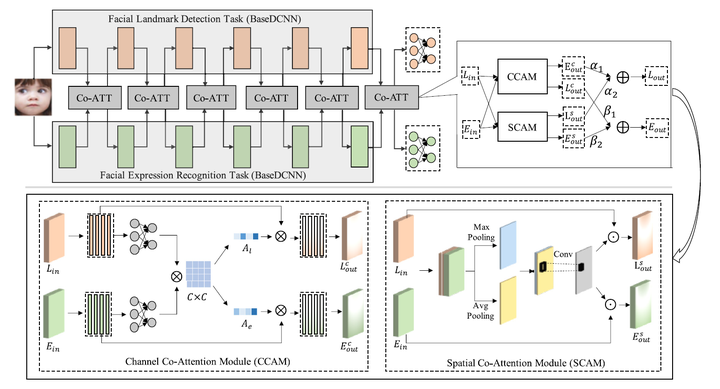

Previous research on Facial Expression Recognition (FER) assisted by facial landmarks has mainly focused on single-task learning or on multi-task learning based on hard-parameter sharing. Methods based on soft-parameter sharing, however, have not been explored in this area. This paper therefore adopts Facial Landmark Detection (FLD) as the auxiliary task and explores new multi-task learning strategies for FER. First, three classical multi-task structures, namely Hard-Parameter Sharing (HPS), Cross-Stitch Network (CSN), and Partially Shared Multi-task Convolutional Neural Network (PS-MCNN), are used to verify the advantages of multi-task learning for FER. Then, we propose a new end-to-end Co-attentive Multi-task Convolutional Neural Network (CMCNN), which is composed of the Channel Co-Attention Module (CCAM) and the Spatial Co-Attention Module (SCAM). Functionally, the CCAM generates channel co-attention scores by capturing the inter-dependencies between the channels of the FER and FLD tasks, while the SCAM combines max- and average-pooling operations to formulate spatial co-attention scores. Finally, we conduct extensive experiments on four widely used benchmark facial expression databases: RAF, SFEW2, CK+, and Oulu-CASIA. The results show that our approach outperforms both single-task and multi-task baselines, validating the effectiveness and generalizability of multi-task learning for FER.
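To make the idea of spatial co-attention concrete, the following is a minimal sketch in plain Python, not the CMCNN implementation. It assumes that the feature maps of the two tasks are concatenated along the channel axis, pooled across channels with max and average operations, and fused by a fixed sum followed by a sigmoid. The abstract does not specify the fusion step, so that choice, along with the function names `spatial_co_attention` and `apply_attention` and the `[C][H][W]` nested-list layout, is an assumption made for illustration only.

```python
import math


def spatial_co_attention(feat_a, feat_b):
    """Return an H x W map of attention scores in (0, 1).

    feat_a, feat_b: nested lists shaped [C][H][W], one per task
    (hypothetical layout; both must share the same H and W).
    """
    stacked = feat_a + feat_b  # concatenate the two tasks along the channel axis
    height, width = len(stacked[0]), len(stacked[0][0])
    scores = []
    for i in range(height):
        row = []
        for j in range(width):
            column = [channel[i][j] for channel in stacked]
            max_pool = max(column)                # max-pooling across channels
            avg_pool = sum(column) / len(column)  # average-pooling across channels
            # Assumption: fixed sum + sigmoid; a trained model would more
            # likely learn this fusion (e.g. with a convolution).
            row.append(1.0 / (1.0 + math.exp(-(max_pool + avg_pool))))
        scores.append(row)
    return scores


def apply_attention(feat, scores):
    """Scale every channel of feat element-wise by the shared spatial scores."""
    return [
        [[v * s for v, s in zip(feat_row, score_row)]
         for feat_row, score_row in zip(channel, scores)]
        for channel in feat
    ]


# Toy 1-channel, 2x2 feature maps standing in for the FER and FLD branches.
fer_feat = [[[0.0, 1.0], [2.0, -1.0]]]
fld_feat = [[[0.0, 3.0], [-2.0, 1.0]]]
scores = spatial_co_attention(fer_feat, fld_feat)
fer_attended = apply_attention(fer_feat, scores)
fld_attended = apply_attention(fld_feat, scores)
```

Because both branches are rescaled by the same score map, spatial locations that are salient for either task are emphasized in both, which is the intuition behind sharing attention between FER and FLD.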